Tencent Hunyuan3D World Model 1.0 — Open-Source AI for Text & Image to Immersive 3D World Generation.


Published on July 30, 2025

🚀 Introduction

Generative AI can now create full 3D worlds from text or image prompts. Tencent’s Hunyuan3D World Model 1.0, revealed on July 26, 2025, at WAIC Shanghai, is presented as the first open-source, explorable, and simulation-capable AI model for whole-world generation.

What Is Hunyuan3D World Model 1.0?

At its core, HunyuanWorld-1.0 is a generative framework that seamlessly transforms a simple text description or single image into a fully explorable, interactive 3D scene, composed of layered meshes and exported for use in tools like Unity, Unreal Engine, and Blender.

Key Highlights:

- 360° immersive panoramas as world proxies, reconstructed into real 3D geometry.

- Semantic hierarchical scene decomposition, allowing object separation and layered editing.

- Editable meshes with disentangled foreground/background semantics—supporting simulation or interactivity.


A collection of diverse 3D worlds created by Tencent’s HunyuanWorld 1.0 AI model

📐 Technical Architecture: How It Works

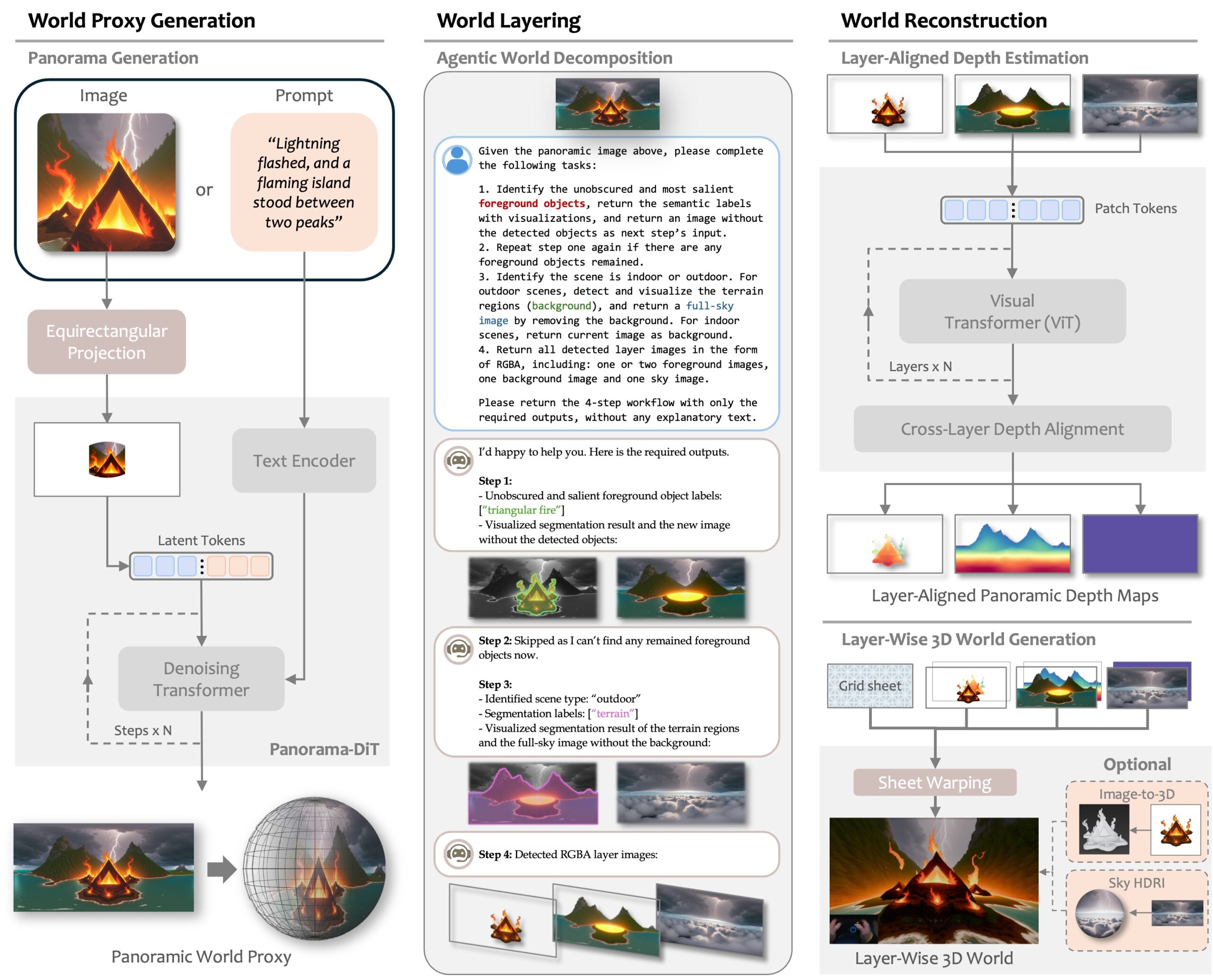

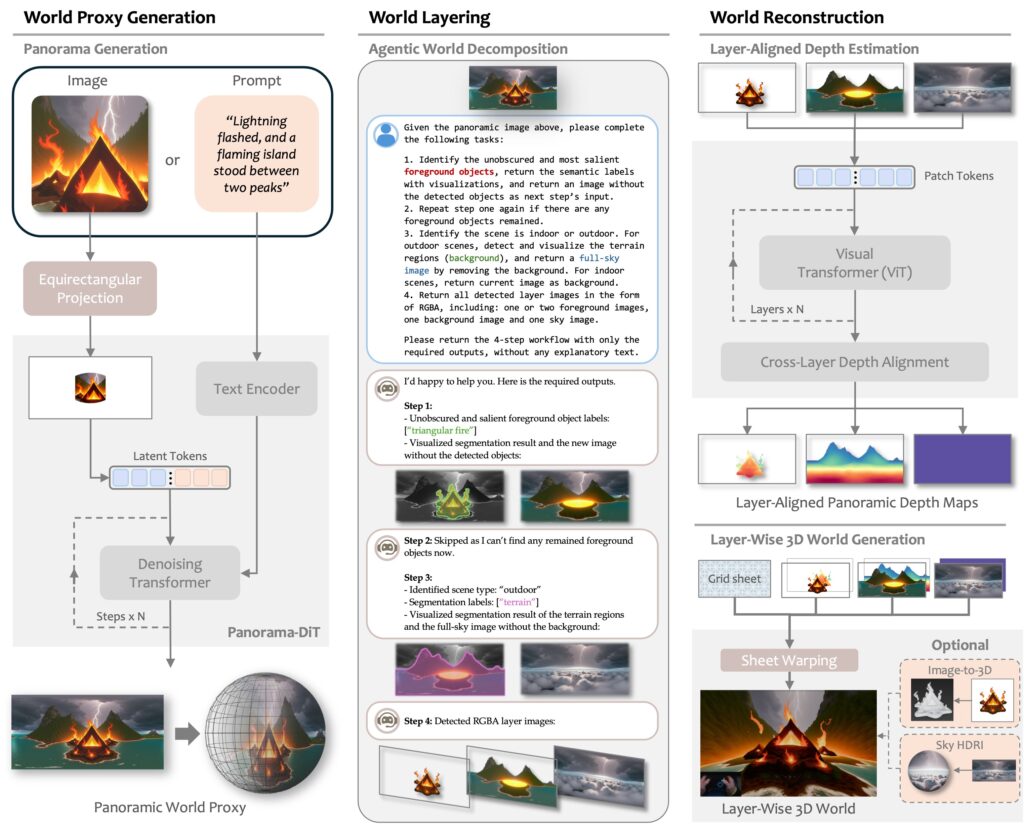

HunyuanWorld-1.0 operates on a multi-stage pipeline:

- Panorama Generation: A diffusion-based module (Panorama-DiT) synthesizes a full 360° panoramic image from input prompts or images, capturing environment, objects, lighting, and terrain patterns.

- Semantic Layering: The panorama is automatically segmented into semantic layers: sky, ground, background scenery, and foreground elements. This supports logical separation during reconstruction and editing.

- Hierarchical Reconstruction: A multi-stage reconstruction network then converts the layered proxy into mesh geometry, converting panorama textures into real-world PBR (Physically Based Rendering) material maps, with clean topology and support for mesh export.
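To make the layering step more concrete, here is a minimal sketch of how a segmented panorama might be split into one mask per semantic layer. The four layer names come from the description above. The `split_layers` helper and the toy label grid are hypothetical illustrations and are not taken from the HunyuanWorld codebase.

```python
from typing import Dict, List

# Layer names taken from the article; everything else here is hypothetical.
LAYERS = ("sky", "ground", "background", "foreground")

def split_layers(labels: List[List[str]]) -> Dict[str, List[List[bool]]]:
    """Turn a per-pixel label grid into one boolean mask per semantic layer."""
    unknown = {name for row in labels for name in row} - set(LAYERS)
    if unknown:
        raise ValueError(f"unexpected layer labels: {sorted(unknown)}")
    return {
        layer: [[name == layer for name in row] for row in labels]
        for layer in LAYERS
    }

# A toy 3x4 "panorama" where each cell already carries a segmentation label.
toy_labels = [
    ["sky", "sky", "sky", "sky"],
    ["background", "background", "foreground", "background"],
    ["ground", "ground", "foreground", "ground"],
]

masks = split_layers(toy_labels)
for layer in LAYERS:
    covered = sum(cell for row in masks[layer] for cell in row)
    print(f"{layer}: {covered} pixels")
```

Keeping each layer as its own mask is what would let a later stage reconstruct or edit the foreground without touching the sky or ground.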

Hunyuan3D-1.0 offers a lite version (~10 seconds) for quick mesh generation from a single image and a standard version (~25 seconds) for higher fidelity. Both efficiently unify text-to-world and image-to-world flows.

📊 Performance & Benchmarking

Tencent’s evaluations report that HunyuanWorld-1.0 outperforms competing open-source approaches on image-quality metrics (BRISQUE, NIQE, Q-Align) and prompt-alignment metrics (CLIP-T for text input, CLIP-I for image input), indicating stronger visual realism and texture consistency.

| Generation Mode | BRISQUE (↓) | NIQE (↓) | Q-Align (↑) | CLIP-T / CLIP-I (↑) |
|---|---|---|---|---|
| Text-to-world | 34.6 | 4.3 | 4.2 | 24.0 (CLIP-T) |
| Image-to-world | 36.2 | 4.6 | 3.9 | 84.5 (CLIP-I) |

These results compare favorably with baseline systems such as LayerPano3D, MVDiffusion, and Diffusion360.

🔧 Built on Hunyuan3D 2.x Ecosystem

While World Model 1.0 is optimized for full scene generation, it builds atop the powerful Hunyuan3D 2.x asset models:

- Hunyuan3D-2.0: Introduced multi-view diffusion plus a texture synthesis pipeline, optimized for higher resolution 3D assets.

- Hunyuan3D-2.5: Launched June 2025 with a LATTICE shape generator (~10B parameters) and advanced PBR texture generation—setting new benchmarks in shape fidelity and material realism.

Together, they form the underlying backbone for mesh accuracy and visual richness in world generation pipelines.

🎮 Real-World Use Cases

- Game Development & VR: Rapid creation of level prototypes or environmental scenes from concept text (“desert oasis at dusk”). Seamless export into engines like Unity or Unreal.

- Digital Art & Virtual Production: Concept artists can generate full immersive worlds from simple prompts. Use editable mesh layers for compositing, retopology, or lighting.

- Education & Simulation: Interactive 3D environments ideal for virtual labs, training simulations, or educational VR content. Layered object separation enables physics simulation.

⚠️ Limitations & Considerations

It’s important to be aware of the model’s current limitations:

- Mesh Complexity: Generated topology can be heavy (hundreds of thousands of triangles). This requires retopology for real-time or AAA usage.

- Depth Realism: Some users report depth feels skybox-like—interactive only at surface layers rather than full volumetric depth.

- Texture Detail: Fidelity may depend on prompt resolution and world complexity.

- Static Scenes: Worlds are generated as static meshes; dynamic behavior must be added manually post-export.
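One quick way to check whether an exported mesh falls into the "hundreds of thousands of triangles" range is to count faces directly in the OBJ file. The sketch below is plain Python and assumes a standard Wavefront OBJ text file. The `count_obj_triangles` helper is a hypothetical utility, not something shipped with the model.

```python
import io
from typing import TextIO

def count_obj_triangles(obj_file: TextIO) -> int:
    """Count triangles in a Wavefront OBJ stream, fan-triangulating n-gons."""
    triangles = 0
    for line in obj_file:
        parts = line.split()
        if parts and parts[0] == "f":
            # A face with n vertices triangulates into n - 2 triangles.
            triangles += max(len(parts) - 1 - 2, 0)
    return triangles

# Tiny in-memory OBJ used only to demonstrate the counting logic.
sample = io.StringIO(
    "v 0 0 0\nv 1 0 0\nv 1 1 0\nv 0 1 0\n"
    "f 1 2 3\n"      # one triangle
    "f 1 2 3 4\n"    # one quad -> two triangles
)
print(count_obj_triangles(sample))  # prints 3
```

For a real export, the same function can be called on an open file handle, for example `with open("scene.obj") as f: count_obj_triangles(f)`, where `scene.obj` is a placeholder filename.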

🧠 Why It Matters

HunyuanWorld-1.0 is a significant leap forward because it is the first truly open-source world-scale generation model, bridging 2D and 3D workflows and accelerating the democratization of generative 3D tools. By open-sourcing it, Tencent is reinforcing Chinese AI leadership globally in 3D AIGC.

🧪 Getting Started

- Download: Clone and explore on GitHub (project released July 26, 2025).

- Install: Dependencies include Python 3.9–3.12, PyTorch, CUDA support, and optional tools like PyTorch3D and NVlabs dust3r.

- Run: Use demo_panogen.py or demo_scenegen.py to generate world scenes from text or images.

- Export: Outputs include .OBJ/.FBX meshes, layer metadata, and PBR texture maps.
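As a rough illustration of the run step, the sketch below assembles a command line for demo_panogen.py from Python. Only the script name comes from this article. The flag names `--prompt`, `--image_path`, and `--output_path`, and the `build_panogen_command` helper itself, are assumptions that should be checked against the repository's README before use.

```python
import shlex
import sys
from typing import List, Optional

def build_panogen_command(
    output_dir: str,
    prompt: str = "",
    image_path: Optional[str] = None,
) -> List[str]:
    """Assemble an argument list for demo_panogen.py (flag names are assumed)."""
    if not prompt and image_path is None:
        raise ValueError("provide a text prompt, an image path, or both")
    cmd = [sys.executable, "demo_panogen.py", "--prompt", prompt]
    if image_path is not None:
        cmd += ["--image_path", image_path]
    cmd += ["--output_path", output_dir]
    return cmd

# Hypothetical output directory; the prompt is the example used in this article.
cmd = build_panogen_command("results/oasis", prompt="desert oasis at dusk")
print(shlex.join(cmd[1:]))
# To actually run it from the repository root: subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids quoting problems when prompts contain spaces or punctuation.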

⚙️ Prompting & Workflow Tips

For best results, use descriptive prompts including style, time of day, mood, and foreground presence (“lush mountainous temple ruins at sunset with mist”). You can also combine image input with a text prompt. Post-process meshes by simplifying and retopologizing them for optimal real-time performance using tools like Blender.

1. Prompt Engineering for Believable Immersive Worlds

The key to creating high-quality, realistic, and immersive 3D worlds with generative AI models like Hunyuan3D lies in effective prompt engineering.

- Start Clear and Specific: Begin with a concise idea.

Less Effective: “Forest”

More Effective: “A dense, tropical rainforest”

- Add Detail and Descriptive Language: Use sensory details, time of day, and mood.

“An ancient, mystical forest bathed in soft morning light, with colossal, moss-covered trees and a misty river flowing through. There’s a serene and magical feel in the air.”

- Utilize Negative Prompts: Specify things you don’t want to see.

Prompt: “A serene, mountainous lake view, clear blue water”

Negative Prompt: “blurry, dirty, urban, cluttered, blotches”

- Include Style and Artist References: “A futuristic cyberpunk city scene, in the style of the Blade Runner film.”

- Iterate and Refine: Experiment with different prompts and analyze the results.

- Combine Image Input with Text: Provide a reference image and a text prompt for specific instructions.
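The tips above can be folded into a small helper that assembles a prompt from its parts, which makes iteration easier to track. This is only a sketch: `build_prompt` is a hypothetical function, and whether a given entry point accepts a separate negative prompt should be confirmed in the project's documentation.

```python
from typing import Sequence, Tuple

def build_prompt(
    subject: str,
    details: Sequence[str] = (),
    style: str = "",
    avoid: Sequence[str] = (),
) -> Tuple[str, str]:
    """Return (prompt, negative_prompt) assembled from the given parts."""
    subject = subject.strip()
    if not subject:
        raise ValueError("a prompt needs a clear subject")
    parts = [subject] + [d.strip() for d in details if d.strip()]
    if style.strip():
        parts.append(f"in the style of {style.strip()}")
    negative = ", ".join(a.strip() for a in avoid if a.strip())
    return ", ".join(parts), negative

prompt, negative = build_prompt(
    "A serene, mountainous lake view",
    details=["clear blue water", "soft morning light"],
    avoid=["blurry", "dirty", "urban", "cluttered", "blotches"],
)
print(prompt)
print(negative)
```

Keeping subject, details, style, and exclusions as separate arguments makes it straightforward to change one element per iteration and compare the resulting worlds.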

2. Mesh Optimization & Retopology Workflows

The 3D worlds generated are often highly detailed. For game development or VR, it’s crucial to optimize these meshes for performance.

- Evaluate the Mesh: Check its polygon count and topology in 3D software such as Blender.

- Polygon Reduction (Decimation): Reduce the number of polygons while preserving detail using tools like Blender’s Decimate Modifier.

- Retopology: Create a new, cleaner, and more efficient polygon layout that closely follows the shape of the original mesh. This is essential for animation and detailed texturing.

- UV Unwrapping & Texture Maps: Create UV maps for the optimized mesh and apply the exported PBR texture maps (Albedo, Normal, Roughness, Metallic, etc.).

- Export and Integration: Export the optimized mesh as an .FBX or .OBJ file and import it into your game engine or software.
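For the decimation step, a minimal sketch is shown here. `decimate_ratio` is a hypothetical helper that turns a triangle budget into the keep-ratio used by Blender's Decimate modifier in Collapse mode, and `apply_decimate` applies that modifier through Blender's Python API. The 400,000 and 50,000 triangle figures are illustrative assumptions, not measurements of real HunyuanWorld output.

```python
def decimate_ratio(current_triangles: int, target_triangles: int) -> float:
    """Fraction of faces to keep so the mesh lands near the target budget."""
    if current_triangles <= 0 or target_triangles <= 0:
        raise ValueError("triangle counts must be positive")
    return min(1.0, target_triangles / current_triangles)

def apply_decimate(obj, ratio: float) -> None:
    """Add and apply a Collapse-mode Decimate modifier (run inside Blender)."""
    import bpy  # only available in Blender's bundled Python

    modifier = obj.modifiers.new(name="Decimate", type="DECIMATE")
    modifier.decimate_type = "COLLAPSE"
    modifier.ratio = ratio
    # modifier_apply operates on the active object, so make obj active first.
    bpy.context.view_layer.objects.active = obj
    bpy.ops.object.modifier_apply(modifier=modifier.name)

# Example budget: a 400,000-triangle export reduced to about 50,000 triangles.
ratio = decimate_ratio(400_000, 50_000)
print(ratio)  # prints 0.125
```

Inside Blender, `apply_decimate(bpy.context.active_object, ratio)` would apply the reduction to the selected mesh while in Object Mode. Decimation only lowers the polygon count, so meshes intended for animation still need the retopology pass described above.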

3. Integration with ComfyUI/End-to-End Pipelines

Integrating the Hunyuan3D output into node-based interfaces like ComfyUI or traditional 3D production workflows can streamline your process.

Integration with ComfyUI:

You can generate the world first, then import a rendered image or panorama into ComfyUI to leverage its powerful upscaling, inpainting, and style transfer capabilities.

Integration with End-to-End 3D Pipelines:

This involves generating the world in Hunyuan3D, exporting the mesh and PBR maps, importing them into your chosen 3D software (e.g., Blender) or game engine (Unity/Unreal), applying materials, optimizing the mesh, and then adding lighting, physics, and gameplay logic.

📂 Appendix: Quick Reference Table

| Feature | Description |
|---|---|
| Release Date | July 26, 2025 |
| Input Types | Text prompt and/or single reference image |
| Output Mode | 360° panorama → semantic layers → editable mesh |
| Mesh Export Formats | .OBJ, .FBX, PBR-supported |
| Generation Speed | Lite: ~10 seconds; Standard: ~25 seconds |
| Compatible Engines | Unity, Unreal Engine, Blender |
| Limitations | High mesh density, static depth, occasional skybox effect |
| Ideal Use Cases | Game dev, VR prototyping, digital art, educational simulation |
| Based On Hunyuan3D Version | Built on Hunyuan3D 2.0 → 2.5 pipeline |

FAQ (Frequently Asked Questions)

Q: What is the Hunyuan3D World Model 1.0?

A: It is the first open-source, explorable, and simulation-capable AI model for whole-world generation. It was developed by Tencent and can transform a simple text description or single image into a fully explorable, interactive 3D scene.

Q: What is the release date of the model?

A: The Hunyuan3D World Model 1.0 was revealed and released on July 26, 2025, at WAIC Shanghai.

Q: How does Hunyuan3D World Model 1.0 work?

A: The process is a multi-stage pipeline: Panorama Generation from a prompt, Semantic Layering of the panorama, and Hierarchical Reconstruction to convert it into a detailed mesh geometry with PBR material maps.

Q: What are the supported input and output formats?

A: The model supports both text prompts and single reference images as input. The outputs include .OBJ and .FBX meshes, layer metadata, and PBR texture maps.

Q: What are the main limitations of the model?

A: Current limitations include high mesh complexity (requiring retopology for real-time use), “skybox-like” depth realism, dependence of texture quality on prompt resolution, and the fact that worlds are generated as static meshes.

Q: How can I get started with the Hunyuan3D World Model 1.0?

A: You can get started by cloning the project from its GitHub repository, which was released on July 26, 2025. The repository includes demo scripts (demo_panogen.py and demo_scenegen.py) to generate scenes from text or images.